Fillable Printable Load and Stress Test Plan

Load and Stress Test Plan

John Wiley and Sons, Inc.

WileyPLUS E5 Load/Stress Test Plan

WileyPLUS E5

Load/Stress Test Plan

Version 1.1

Author: Cris J. Holdorph

Unicon, Inc.

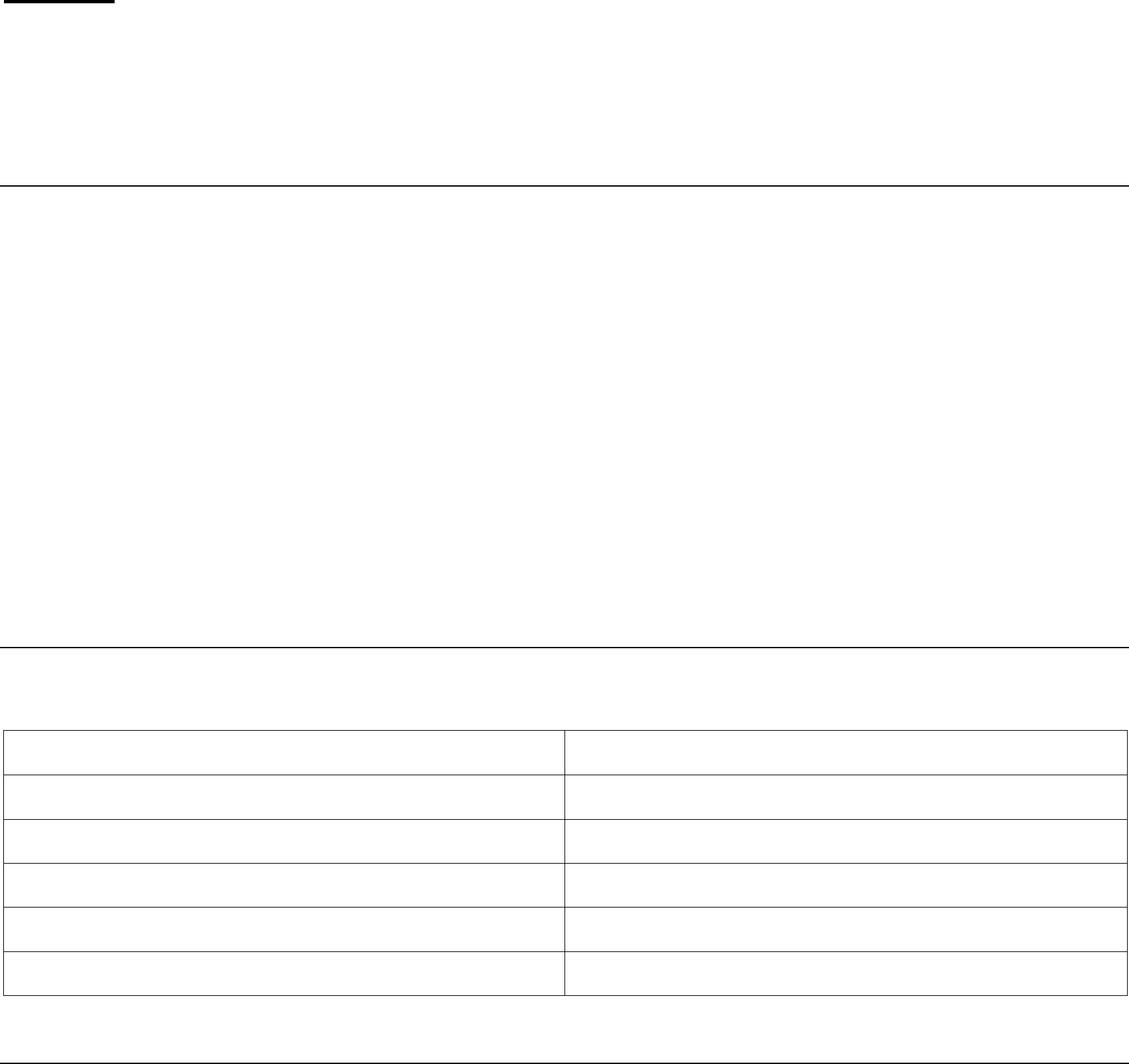

Audit Trail:

| Date | Version | Name | Comment |
| --- | --- | --- | --- |
| April 2, 2008 | 1.0 | Cris J. Holdorph | Initial Revision |
| April 9, 2008 | 1.1 | Cris J. Holdorph | First round of revisions |

Table of Contents

1. REFERENCE DOCUMENTS

2. OBJECTIVES AND SCOPE

3. EXCLUSIONS

4. APPROACH AND EXECUTION STRATEGY

5. LOAD/STRESS TEST TYPES AND SCHEDULES

6. TEST MEASUREMENTS, METRICS, AND BASELINE

7. PERFORMANCE/CAPABILITY GOALS (EXPECTED RESULTS) AND PASS/FAIL CRITERIA

8. SOFTWARE AND TOOLS USED

9. LOAD DESCRIPTIONS

10. CONTENT AND USER DATA PREPARATION

11. LOAD SCRIPT RECORDING

12. LOAD TESTING PROCESS

13. TRAINING NEEDS

14. SYSTEM-UNDER-TEST (SUT) ENVIRONMENT

15. TEST DELIVERABLES

16. TEAM MEMBERS AND RESPONSIBILITIES

17. RISK ASSESSMENT AND MITIGATION

18. LIST OF APPENDICES

19. TEST PLAN APPROVAL

APPENDIX 1 STUDENT TEST SCENARIO

APPENDIX 2 INSTRUCTOR TEST SCENARIO

APPENDIX 3 SINGLE FUNCTION STRESS TEST SCENARIOS

1. Reference Documents

• E5 Performance Scalability Goals.xls

2. Objectives and Scope

The purpose of this document is to outline the environment and performance test plan for benchmarking Sakai 2.5.0 core tools for use in WileyPLUS E5. In general, the purposes of this testing are:

• Validate that the core Sakai framework and certain tools meet the minimum performance standards established for this project. The following tools will be measured for performance:

  • Announcements

  • Schedule

  • Resources

  • Gradebook

  • Forums

  • Site Info

• Establish a baseline for performance that can be used to measure any changes made to the core Sakai framework and tools going forward.

The performance testing effort outlined in this document will not cover the following:

• Performance testing any new Sakai tools that are developed

• Performance testing any changes to Sakai tools that are planned for WileyPLUS E5

• Performance testing any BackOffice applications or integrations

3. Exclusions

This test plan will not cover any functional or accuracy testing of the software being tested. This test plan will not cover any browser or software compatibility testing.

4. Approach and Execution Strategy

Sakai will be tested using an existing Wiley performance test process. This test plan will serve as the basis for Testware to create Silk Performer Test Scripts. These scripts will be run by Leo Begelman using the Silk Performer software. Unicon, Inc. will watch and measure the CPU utilization of the web and database servers used during testing. Unicon, Inc. will analyze and present the performance test results to Wiley at the conclusion of the performance test cycle.

5. Load/Stress Test Types and Schedules

The following tests will be run:

• Capacity Test – Determines the maximum number of concurrent users that the application server can support under a given configuration while maintaining an acceptable response time and error rate as defined in section 7.

• Consistent Load Test – Long-running stress test that drives a continuous load on the application server for an extended period of time (at least 6 hours). The main purpose of this type of test is to ensure the application can sustain acceptable levels of performance over an extended period of time without exhibiting degradation, such as might be caused by a memory leak.

• Single Function Stress Test – A test where 100 users perform the same function with no wait times and no ramp up time. This test will help determine how the application reacts to periods of extreme stress in a very narrow area of the code. The areas that will be tested in this fashion are outlined in Appendix 3.

• Baseline Test – At the conclusion of the Capacity Test and Consistent Load Test, a third test will be established with the goal of being a repeatable test that can be performed when any portion of the system is changed. This test will not have the secondary goals the other two tests have, and will simply exist to be a known quantity rather than the breaking point values the other tests are interested in.

Several test cycles may be required to obtain the results desired. The following test cycles are intended to serve as a guideline to the different test executions that may be necessary.

1. Obtain a baseline benchmark for 120 users logging into the system over the course of 15 minutes and performing the scenarios outlined in Appendices 1 and 2. (Note: there should be 118 students and 2 instructors).

2. Use the results from the first execution to make a guess as to how many users the system might support. One possibility might be to run 1000 different users through the system for one hour, with approximately 240 concurrent users at a time.

3. If the second execution continues to meet the performance goals outlined in section 7, continue to run new tests with increasing quantities of concurrent users until the performance goals are no longer met. It is desired that one server will support up to 500 concurrent users.

4. Assuming the maximum capacity is determined, a consistent load test will be run. The consistent load test will use a number of concurrent users equal to 50% of the maximum capacity. This test will run for 6 hours.

5. After both the maximum capacity and consistent load tests have been run, create a baseline test that stresses the system without running the maximum system load. The baseline test is recommended to be run at 75% of the maximum capacity for a period of two hours.

6. Run each single function test listed in Appendix 3. If any test exceeds the maximum number of server errors goal (see section 7), then try to determine if any configuration changes can be made to the system under test environment (see section 14) and run the test again.
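The sizing rules in the test cycles above reduce to simple arithmetic. The following is a minimal sketch of that arithmetic and is not part of the original plan: the function names `split_users` and `derived_loads` are hypothetical, and the rounding behavior (one instructor per full group of 60 users, loads rounded to whole users) is an assumption.

```python
def split_users(total_users, students_per_instructor=59):
    """Split a user count into (students, instructors) at the plan's 59:1 ratio.

    Assumption: one instructor per full group of 60 users, with at least
    one instructor whenever there are any users at all.
    """
    if total_users <= 0:
        return 0, 0
    group = students_per_instructor + 1
    instructors = max(1, total_users // group)
    return total_users - instructors, instructors


def derived_loads(max_capacity):
    """Derive the follow-up test sizes from the measured maximum capacity."""
    return {
        "consistent_load_users": round(max_capacity * 0.50),  # cycle 4, 6 hours
        "baseline_users": round(max_capacity * 0.75),         # cycle 5, 2 hours
    }


print(split_users(120))    # (118, 2), matching the note in cycle 1
print(derived_loads(500))  # 500 is the desired capacity, used here only as an illustration
```

If the capacity test finds a different maximum, only the argument to `derived_loads` changes; the 50% and 75% factors come directly from cycles 4 and 5.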

6. Test Measurements, Metrics, and Baseline

The following metrics will be collected:

Database Server:

• CPU Utilization – Max., Avg., and 95th percentile. This data will be collected using the sar system utility.

• SQL query execution time: The time required to execute the top ten SQL queries involved in a performance test run. This data will be collected using Oracle Stats Pack.
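The three CPU summary figures named above (maximum, average, and 95th percentile) can be derived from a series of utilization samples. The sketch below is illustrative only: parsing of sar output is not shown, the function name `summarize_cpu` and the sample values are made up, and the nearest-rank percentile method is an assumption, since the plan does not state which percentile definition is used.

```python
def summarize_cpu(samples):
    """Return max, average, and 95th percentile of CPU utilization samples (percent)."""
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest sample such that at least 95% of all
    # samples are less than or equal to it. Integer math avoids float rounding.
    rank = (95 * len(ordered) + 99) // 100
    return {
        "max": ordered[-1],
        "avg": sum(ordered) / len(ordered),
        "p95": ordered[rank - 1],
    }


# Hypothetical utilization samples, one per sar interval.
samples = [12.0, 35.5, 40.0, 41.2, 38.9, 90.1, 44.0, 39.5, 42.3, 37.7]
print(summarize_cpu(samples))
```

With few samples the 95th percentile collapses onto the maximum, so a short sampling interval over the full test run gives a more useful figure.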

Application Server:

• Application Server CPU – Max., Avg., and 95th percentile. This data will be collected using the sar system utility.

• Memory footprint: The memory footprint is the peak memory consumed by the application while running. This data will be collected using the Java Virtual Machine (JVM) verbose garbage collection logging.

• Bytes over the wire (BoW): The bytes-over-the-wire metric is a count of the number of bytes that are passed between the server and the client. There are two major ways to measure this value, initial action and cached scenarios:

  • The initial action means that the user has no cached images, scripts, or pages on their machine because the request is a fresh request to the server. Therefore, that request is expected to be more expensive.

  • The cached mode means that images and pages are cached on the client, with only the dynamic information needing to be transmitted for these subsequent actions.

  • It is recommended that a mix of initial action and cached scenarios be included in the performance test runs.

  • This data will be collected using Silk Performer.

Client:

• Time to last byte (TTLB): This is what will currently be measured in the stress tests, as opposed to user-perceived response time. Time to last byte measures the time between the request leaving the client machine and the last byte of the response being sent down from the server. This time does not take into account the scripting engine that must run in the browser, the rendering, and other functions that can cause a user to experience poor performance. If the client-side script is very complex, this number and the user-perceived response time can be wildly different. A user will not care how fast the response reaches their machine if they cannot interact with the page for an extended amount of time; what matters to them is the user-perceived response time. This data will be collected using Silk Performer.

Network:

• Network Traffic: Network traffic analysis is one of the most important functions in performance testing. It can help identify unnecessary transmissions, transmissions which are larger than expected, and those that can be improved. We need to watch network traffic to identify the bytes over the wire being transmitted, the response times, and the concurrent connections that are allowed. This data will be collected using the sar system utility.

7. Performance/Capability Goals (Expected Results) and Pass/Fail Criteria

The following are performance requirements (success criteria) for the performance tests:

1. The average response time (measured by the Time to last byte metric) is less than 2.5 seconds

2. The worst response time (measured by the Time to last byte metric) is less than 30 seconds

3. The average CPU utilization of the database server is less than 75%

4. The average CPU utilization of the application server is less than 75%

5. Each blade server must be capable of handling 500 concurrent users

6. The maximum number of acceptable server errors (non-HTTP-200 status codes on client requests) will be less than 2% of all client requests.
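The six criteria above can be checked mechanically once a run has been summarized. The following is a hedged sketch rather than a prescribed report format: the `RunResult` class, its field names, and the example figures are all hypothetical, and only the thresholds come from this section.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    """Summary of one test run; all field names are hypothetical."""
    avg_ttlb_s: float        # average time to last byte, seconds
    worst_ttlb_s: float      # worst time to last byte, seconds
    db_cpu_avg_pct: float    # average database server CPU, percent
    app_cpu_avg_pct: float   # average application server CPU, percent
    concurrent_users: int    # concurrent users sustained on one blade server
    total_requests: int
    error_requests: int      # client requests answered with a non-200 status


def evaluate(run):
    """Map each section 7 criterion to True (met) or False (not met)."""
    error_rate = run.error_requests / run.total_requests if run.total_requests else 0.0
    return {
        "avg_response_under_2.5s": run.avg_ttlb_s < 2.5,
        "worst_response_under_30s": run.worst_ttlb_s < 30,
        "db_cpu_under_75pct": run.db_cpu_avg_pct < 75,
        "app_cpu_under_75pct": run.app_cpu_avg_pct < 75,
        "supports_500_users": run.concurrent_users >= 500,
        "errors_under_2pct": error_rate < 0.02,
    }


# Made-up figures for illustration; these are not measured results.
example = RunResult(1.8, 12.4, 61.0, 70.5, 500, 100_000, 1_200)
results = evaluate(example)
print(results)
print("PASS" if all(results.values()) else "FAIL")
```

Reporting each criterion separately, instead of a single pass/fail flag, makes it easier to see which goal was missed when capacity is being pushed upward in successive cycles.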

8. Software and Tools Used

| Component | Software Version |
| --- | --- |
| Sakai | Sakai 2.5.0 GA |
| Servlet Container | Tomcat 5.5.25 |
| Java Virtual Machine | Sun Java Development Kit 1.5.0.14 |
| Database | Oracle 10g (version 10.?.?.?) |
| Load Test Tool | Silk Performer |

9. Load Descriptions

Each test outlined in section 5 will run with a ratio of 59 students to 1 instructor. There is no expected difference between users logging in for the first time and subsequent logins, given how the data (outlined in section 10) will be created. The data set these tests start with will appear to be in mid-course for all users.

There will be no ramp up time for any of the Single Function Stress Tests. The ramp up time for all other tests should be set to 1 user every 3 seconds. 120 users should therefore be running within 6 minutes. The wait time between requests is contained in the test scenarios in Appendix 1 and Appendix 2.

In order to place as much stress on the system as possible with a small number of users, all users should come from different worksites.
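The ramp up figure quoted in this section (120 users within 6 minutes at 1 user every 3 seconds) can be reproduced for any user count. This is a small illustrative sketch; the function names are hypothetical and the code is not a Silk Performer configuration.

```python
def ramp_up_seconds(users, seconds_per_user=3):
    """Total ramp up window when one user is started every `seconds_per_user`."""
    return users * seconds_per_user


def start_offsets(users, seconds_per_user=3):
    """Start time, in seconds from test start, for each virtual user."""
    return [i * seconds_per_user for i in range(users)]


print(ramp_up_seconds(120) / 60)   # 6.0 minutes, as stated in the plan
print(ramp_up_seconds(500) / 60)   # 25.0 minutes for the desired 500-user load
print(start_offsets(5))            # [0, 3, 6, 9, 12]
```

The ramp up window grows linearly with the user count, so it should be added to the planned duration when scheduling the larger capacity runs.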

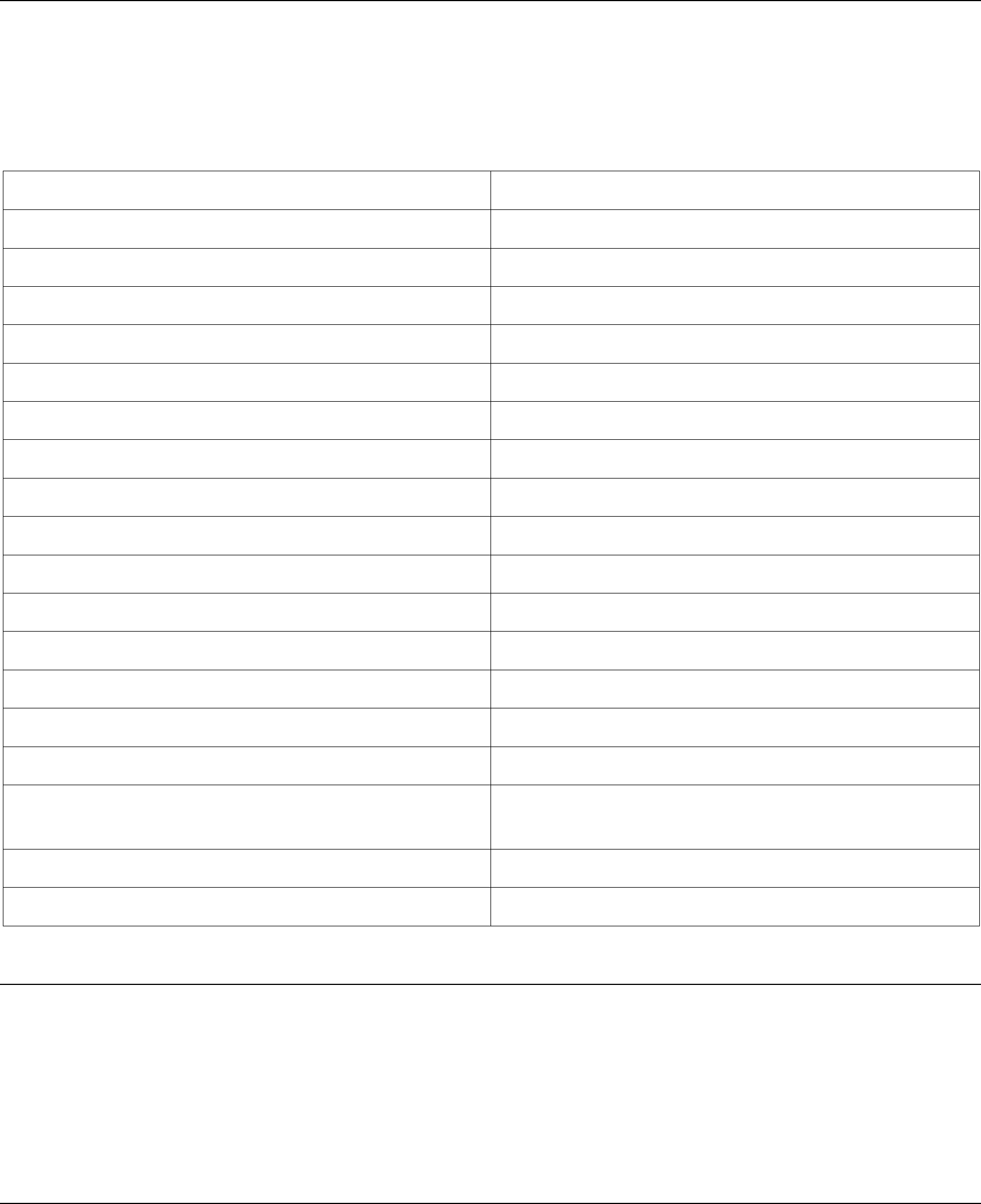

10. Content and User Data Preparation

The Sakai system will need to be preloaded with data before the performance testing will begin. This data will be created using the Sakai Shell and Sakai Web Services. Once the data is created, it will be extracted from the database with a database dump. The following table identifies several types of data that will need to be preloaded into the Sakai environment.

| Type of Data | Amount of Data |
| --- | --- |
| Users | 93567 |
| Students | 92000 |
| Instructors | 1557 |
| Administrators | 10 |
| Very Large Worksites | 2 |
| Large Worksites | 5 |
| Medium Worksites | 50 |
| Small Worksites | 1500 |
| Students to Instructors Ratio | 59 to 1 |
| Students per Very Large Worksite | 1000 |
| Students per Large Worksite | 500 |
| Students per Medium Worksite | 250 |
| Students per Small Worksite | 50 |
| Announcements per Worksite | 13 |
| Forum Topics per Worksite | 1/3 of all forum posts in a worksite |
| Forum Posts per Worksite (spread across topics) | ¼ students in worksite * 6.5 |
| Columns (Assignments) in Gradebook | 13 |
| Instructor Resources per Worksite | 20 |
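The totals in the table are consistent with the per-worksite figures, which can be confirmed with a short calculation. The sketch below is a cross-check only, under two assumptions that the plan does not state explicitly: that there is one instructor per worksite (this reproduces the 1557 figure), and that the forum formulas read as (students / 4) * 6.5 posts and posts / 3 topics. Rounding of fractional forum counts is left open.

```python
# size: (number of worksites, students per worksite), taken from the table
WORKSITES = {
    "very_large": (2, 1000),
    "large": (5, 500),
    "medium": (50, 250),
    "small": (1500, 50),
}
ADMINISTRATORS = 10

total_worksites = sum(count for count, _ in WORKSITES.values())
total_students = sum(count * per_site for count, per_site in WORKSITES.values())
# Assumption: one instructor per worksite.
total_instructors = total_worksites
total_users = total_students + total_instructors + ADMINISTRATORS


def forum_counts(students_in_worksite):
    """Return (posts, topics) for one worksite under the assumed reading of the table."""
    posts = students_in_worksite / 4 * 6.5
    topics = posts / 3
    return posts, topics


print(total_worksites, total_students, total_users)  # 1557 92000 93567
print(total_students / total_instructors)            # roughly 59 students per instructor
print(forum_counts(50))                              # posts and topics for a small worksite
```

Running the same check after any change to the worksite counts keeps the Users, Students, and Instructors rows in agreement with each other.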

11. Load Script Recording

Testware will create new Silk Performer Test Scripts based on the two scenarios outlined in Appendices 1 and 2. Appendix 1 represents the Student scenario, while Appendix 2 represents the Instructor scenario. The Test Suite should be set up to accommodate a ratio of 59 students to 1 instructor. Wait time should be included between each page request, so that the total time for an activity is equal to the number of page requests * 2.5 seconds + the total wait time.
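The timing rule above can be rearranged to find how much wait time a script needs for a given activity: total wait = time spent on task - (page requests * 2.5 seconds). The sketch below applies that rearrangement. The function name is hypothetical, and the example assumes that each step of the "Read Announcements" task in Appendix 1 (0.5 min, two steps) is one page request, which the plan does not state.

```python
def total_wait_seconds(task_minutes, page_requests, response_budget_s=2.5):
    """Wait time to script for one activity, from the section 11 timing rule."""
    task_seconds = task_minutes * 60
    wait = task_seconds - page_requests * response_budget_s
    if wait < 0:
        raise ValueError("task time is shorter than the response-time budget")
    return wait


# "Read Announcements": 0.5 min on task, assumed to be 2 page requests.
wait = total_wait_seconds(0.5, 2)
print(wait)       # 25.0 seconds of wait in total
print(wait / 2)   # 12.5 seconds per request if spread evenly (an assumption)
```

How the total wait is divided between individual requests is a scripting decision; an even split is only one option.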

12. Load Testing Process

This section details the load testing process that will be followed for all performance tests conducted as described in this test plan.

1. Start data collection scripts

2. Stop the application

3. Remove any temporary files

4. Reset the database and file system to known starting points

5. Start the application and run a quick sanity test to make sure each application server can successfully return login screen markup and can successfully process a login request.

6. Request the Silk Performer scripts be started

7. Once the Silk Performer scripts have completed, stop the data collection scripts

8. Acquire any database-specific data being collected

9. Collate the data for all the metrics specified in section 6 into one report.

10. Make the report available in the Wiley Portal

13. Training Needs

Testware will need to be trained on the use of the Sakai environment. The scenarios outlined in Appendix 1 and Appendix 2 give some idea about which parts of Sakai will be used; however, more training will probably be required by the Test Script writers.

14. System-Under-Test (SUT) Environment

Specifying mixes of system hardware, software, memory, network protocol, bandwidth, etc.

• Network access variables: For example, 56K modem, 128K Cable modem, T1, etc.

• ISP infrastructure variables: For example, first tier, second tier, etc.

• Client baseline configurations

  • Computer variables

  • Browser variables

• Server baseline configurations

  • Computer variables

  • System architecture variables and diagrams

Other questions to consider asking:

• What is the definition of “system”?

• How many other users are using the same resources on the system under test (SUT)?

• Are you testing the SUT in its complete, real-world environment (with load balancers, replicated database, etc.)?

• Is the SUT inside or outside the firewall?

• Is the load coming from the inside or outside of the firewall?

15. Test Deliverables

The following test deliverables are expected as part of this performance testing effort.

• Test Plan – This Document

• Test Scripts – Silk Performer Test Scripts to implement the scenarios outlined in Appendix 1 and Appendix 2

• Test Results Data – The data resulting from the performance tests run

• Test Results Final Report – The final report that documents and analyzes the results of the performance tests that were conducted according to this test plan

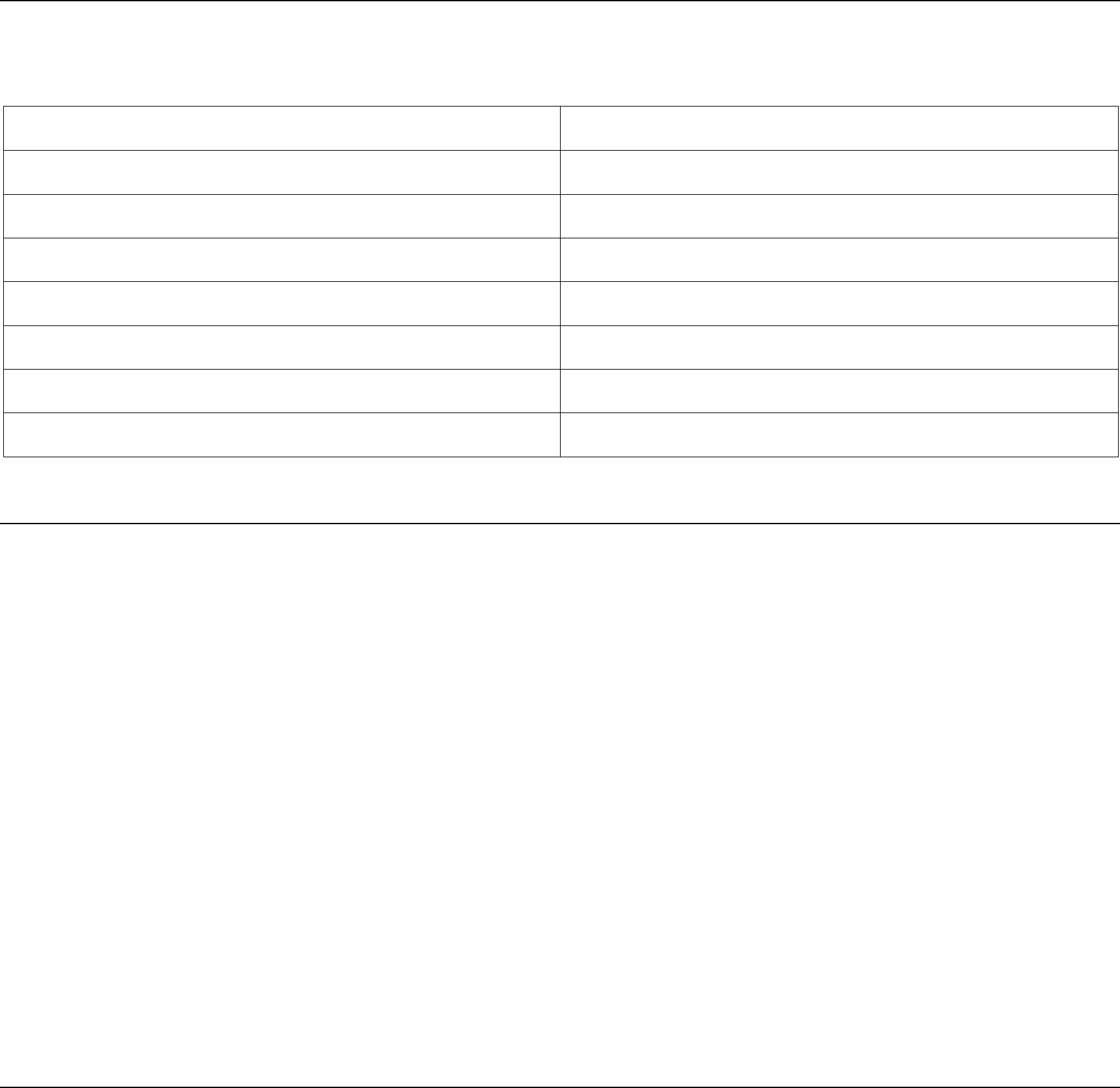

16. Team Members and Responsibilities

The following table defines the different team member responsibilities.

| Responsibility | Team Member |
| --- | --- |
| Test Plan Creation | Cris Holdorph (Unicon) |
| Silk Performer Test Script Creation | TestWare |
| Validation of Test Script Execution | Leo Begelman and Cris Holdorph (Unicon) |
| Sakai Test Fixture/Data Generation | Cris Holdorph (Unicon) |
| Silk Performer Test Script Execution | Leo Begelman |
| CPU Monitoring and Test Orchestration | Unicon |
| Database Loading and Analysis | Alex Bragg (Unicon) |

17. Risk Assessment and Mitigation

Business

Risk: Unsatisfactory Performance of Sakai

Mitigation: Conduct performance testing of core Sakai at the beginning of the project. If Sakai does not meet the goals established above, project management will have the most time possible to adjust project plans.

IT

Risk: Limit on the number of virtual users available with Silk Performer

Mitigation: Test only one blade server per 500 virtual users available with Silk Performer

Risk: All Sakai tools needed for testing at this stage may not be available

Mitigation: Tests will be conducted against the core tools that are in the Sakai 2.5.0 release. Where a tool that is needed is not yet available, a placeholder tool has been specified in the test scenarios in Appendices 1 and 2 (e.g., Calendar will be used in place of Student Gateway for this testing).

18. List of Appendices

• Appendix 1 – Student test scenario

• Appendix 2 – Instructor test scenario

• Appendix 3 – Single function stress test scenarios

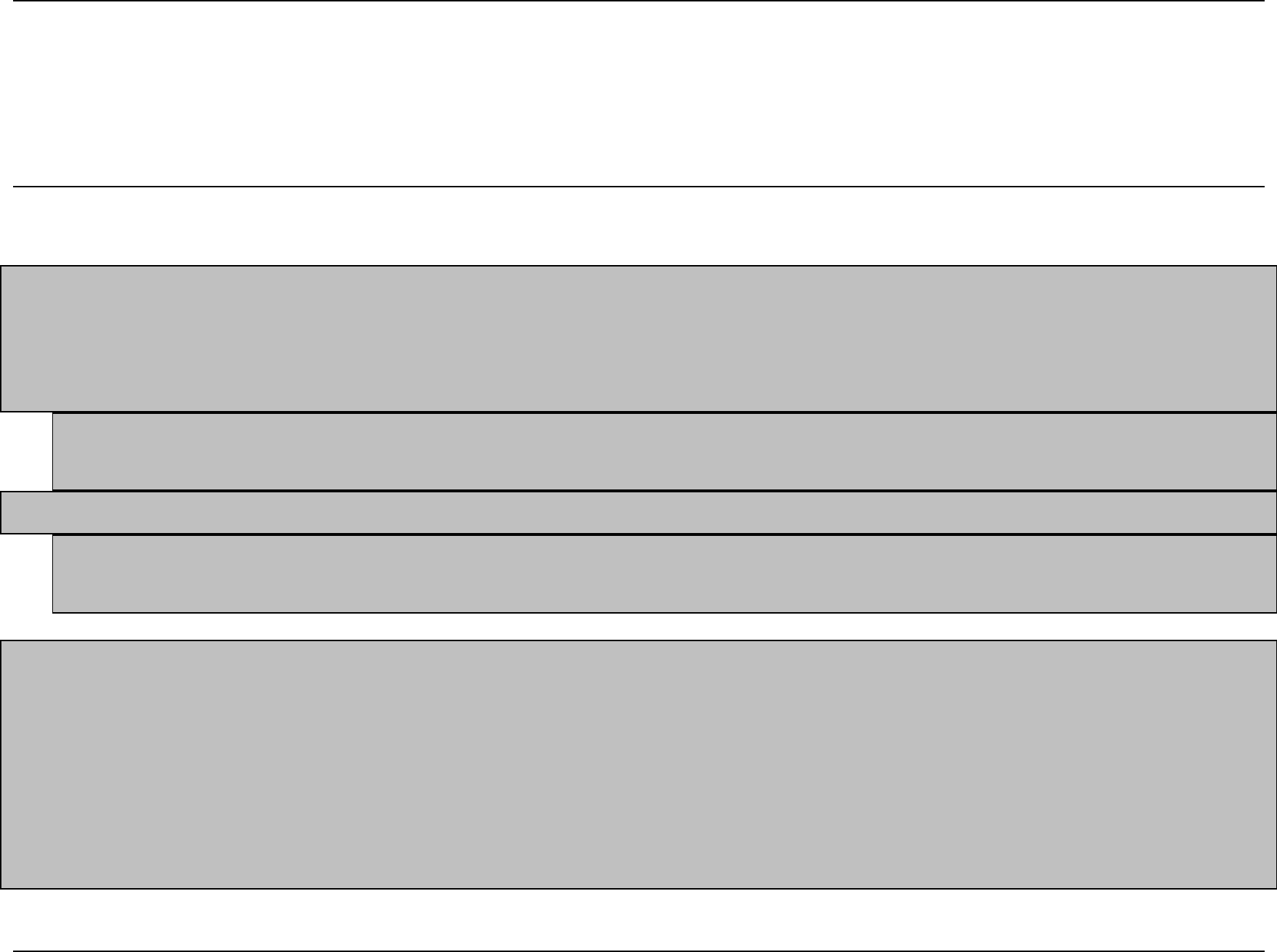

19. Test Plan Approval

Business Approval

__________________________________________________ _____________

Tom Speyer, Director, Enabling Technologies Date

IT Approval

__________________________________________________ _____________

Brian Librandi, Date

Testing Approval

___________________________________________________ _____________

Leo Begelman, Software QA Manager, Higher Education Date

Appendices

Appendix 1 Student Test Scenario

1. Login

Tool/Service: Access Control

Time Spent on Task: 0.25 min

Notes: This test plan will assume Sakai local authentication.

Steps:

A. Enter User Id

B. Enter Password

C. Click the Login button

D. Click on a worksite tab for this student that represents a class and is not the My

Workspace tab

2. Read Announcements

Tool/Service: Announcements

Time Spent on Task: 0.5 min

Notes:

Steps:

A. Click on Announcements Page

B. Select an Announcement Title under Subject

3. Navigate to today's work

Tool/Service: Schedule

Time Spent on Task: 0.5 min

Notes: Schedule will mimic the Student Gateway for now

Steps:

A. Click on Schedule Page in Worksite to View Events for Today

4. Add Event to Personal Site

Tool/Service: Schedule

Time Spent on Task:

Notes: Add Schedule Event in Workspace

Steps:

A. Click on the Schedule Page in Personal Site

B. Click Add

C. Enter Title/Date/Start Time and Message

D. Click Save Event

5. Do Readings

Tool/Service: Resources

Time Spent on Task: 3 min

Notes:

Steps:

A. Click on Resources Page in Worksite

B. Select desired resource