IT Balanced Scorecards - Suncorp’s journey to a contemporary model

Author: Ian Ashley

IT Metrics & Reporting

Suncorp Limited.

Brisbane Australia.

1. The Problem

Growing the business in an environment of increasing competition and a changing marketplace

challenges commercial organisations to focus on further reducing operating costs – “doing more

with less” - as a means to sustain shareholder value.

Restructures, transformations, mergers, acquisitions, disposals and off-shoring have become the

norm, and place intense focus on non-revenue earning divisions within the corporation to cut costs

and more strictly qualify new initiatives in terms of benefits realisation. The big-spending Y2K era

has intensified business focus on IT costs, and IT departments who continue to be measured largely

in financial terms are constantly “under the gun”.

Three years ago, Suncorp was restructuring for the 4th time since the 1996 merger of Suncorp Building Society, Metway Bank and QIDC, and the resulting transformation process was again focusing on reducing operating costs, particularly with the acquisition of GIO under way.

The problem for IT was that its performance was largely measured in financial terms and even

though it was already operating below industry average cost, the Business goals remained sharply

focused on share price and the pressure to further reduce costs was a perpetual situation. For IT, the

measures on performance were largely focused on:

• IT cost as % of group Operating Expenses;

• IT cost as % of Revenue

• Operating Expenses Variance to budget

• FTE numbers Variance to budget

Although IT maintained that System Availability and Security were also important, these aspects of

IT performance were “just expected” by the business.

Additionally, business assessment of IT delivery performance was based on perception of project

delivery, and unfortunately, what sticks in the mind of the Business are the projects that don’t go so

well - the 10% of the projects iceberg that sits above the water.

In short:

• What IT saw as high value had become a commodity to the business;

• What IT didn’t want to be seen as (a cost centre) was trapped in the headlights.

IT had to change the way it was perceived and the way it was assessed - it had to develop more

ways to show value to the business and take the focus off costs.

IT Balanced Scorecards – Suncorp’s journey to a contemporary model Page 1

Author: Ian Ashley. IT Metrics & Reporting, Suncorp Limited.

2. The Journey Begins

The Business introduced Balanced Scorecards to assess performance and the General Executive of

IT embarked on a strategy that would see it adopting this approach to broaden the assessment base

using a concept familiar to the business.

An IT Metrics team was established in the 2002 transformation and a pilot balanced scorecard for

IT as a Business Unit was developed for the 2002/3 financial year (FY). Since then, many lessons

have been learned on metrics for IT, balanced scorecards and how management deal with the

information they receive.

The initial IT Balanced Scorecard, developed on the Kaplan & Norton model [1] has been reworked

to a more contemporary model and scorecards are now provided monthly to the IT General

Executive, 4 General Managers and 18 Managers across IT. They form a key input into annual

performance reviews of IT management against KPIs.

In the complex environment that is Suncorp IT¹, balancing scorecard timeliness, quality and value (meaning and use) is a challenge. The most significant lessons learned with the introduction of IT Balanced Scorecards to date in Suncorp are summarised, somewhat metaphorically:

1. It is a journey, somewhat like a roller coaster. Lots of ups and downs, twists and turns, but

you do get there, only to go around again, and again.

2. Many of the metrics will change, depending on where the hot spots are, but a core set will

seem to persist. Be prepared for change.

3. Management acceptance of bad news on scorecards should not be expected – particularly

near assessment time when bonuses are at stake. Be prepared for a challenge.

4. Data Quality begins at home; unfortunately, everyone seems to be renting, not owning.

3. Initial Steps – Kaplan & Norton

Meta Group was engaged to ramp-up the initiative, and proposed the following steps towards

implementation of Suncorp’s first IT Balanced Scorecard:

1. Choose a Structure for the Scorecard – a methodology and layout;

2. Select Appropriate Metrics that drive action and identify Ownership thereof;

3. Assess Capability and Quality for Data Capture and document the derivation of metrics

from that data;

4. Pilot the scorecard and assess each month. Build understanding in IT Management of the metrics and associated actions where issues are highlighted. Improve Data Quality;

5. Review metrics and targets annually;

6. Once established, link to KPIs to underpin corrective action on issues raised.

The involvement of Meta Group was a tactical move to jump-start the process with a scorecard

layout and “industry standard” set of metrics, given:

• our inexperience with balanced scorecards for IT at the time; and

• the complexity of the Suncorp environment.

¹ Suncorp IT has over 900 staff working across 25 core systems involving over 2500 applications. It delivers around 11,000 releases per year and averages 40 concurrent projects. Suncorp IT supports the corporate back office and 3 business lines – banking, insurance and wealth management. Suncorp is Australia’s 6th largest bank and 2nd largest general insurer. Head Office – Brisbane, Australia.

3.1 Step 1 – Structure

Suncorp and the Consultant from Meta decided to base the scorecard on a traditional Kaplan &

Norton model [1] with perspectives of:

• Finances

• Business Internal Processes

• Customers

• Learning & Growth (ie. Employees)

The main benefits cited by Kaplan and Norton [1] for this model were:

• focuses the whole organisation on the few key things needed to create breakthrough

performance;

• helps integrate various corporate programs, such as quality, re-engineering, and

customer service initiatives;

• breaks down strategic measures to local levels so that unit managers, operators, and

employees can see what's required at their level to roll into excellent performance

overall.

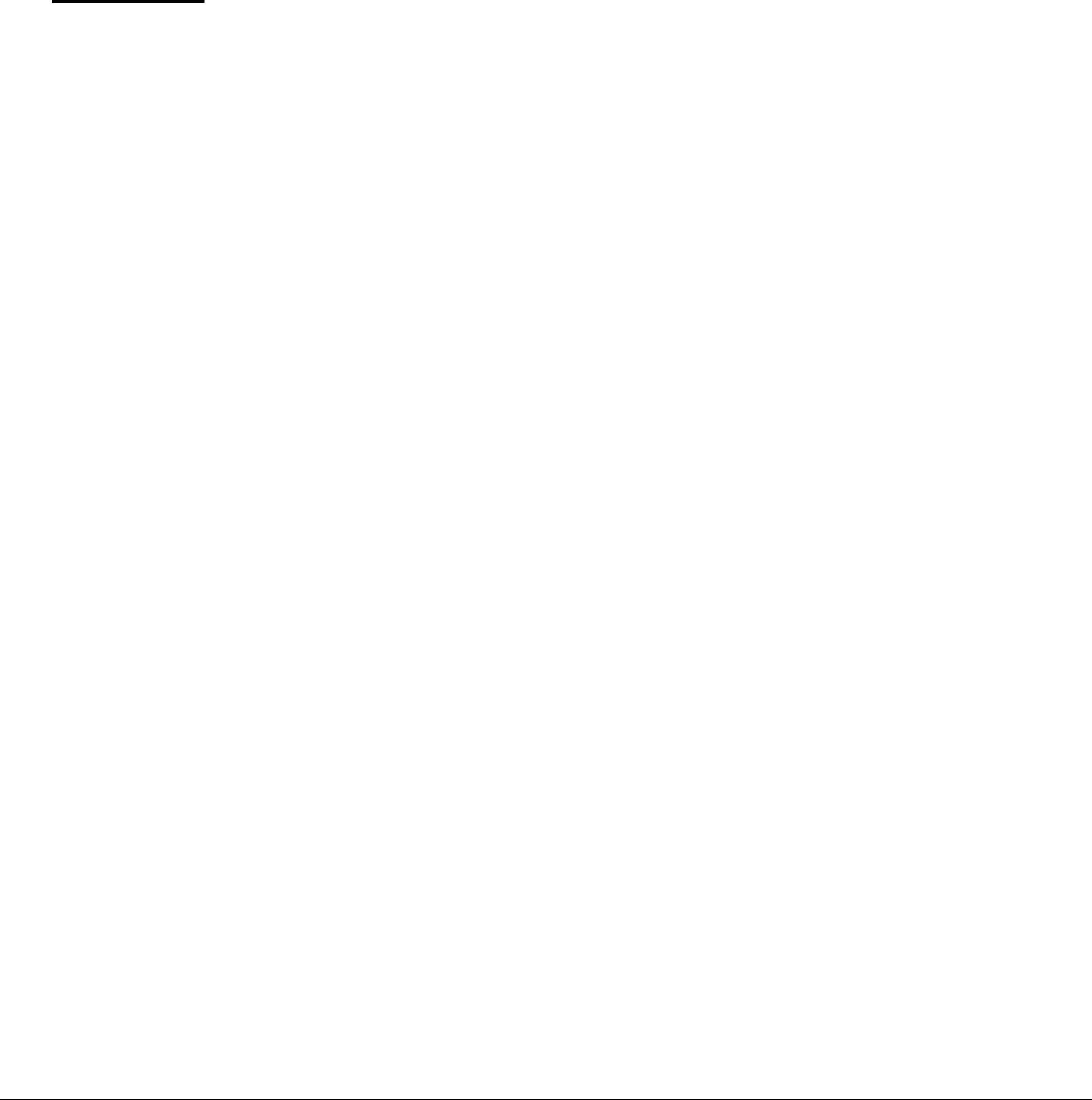

The structure for Suncorp IT Balanced Scorecard was agreed, based on the 4 traditional

perspectives and divided into 8 sub-categories as shown in Figure 1.

[Figure 1: quadrant diagram of the four perspectives (Finances, Internal Processes, Customers, Employees), with sub-categories including Customer Satisfaction, Project & Release Performance, Service Delivery Performance, Financials & Productivity, Audit / Risks, Application Portfolio Quality and Employer of Choice.]

Figure 1 – Suncorp IT Balanced Scorecard model based on Kaplan & Norton

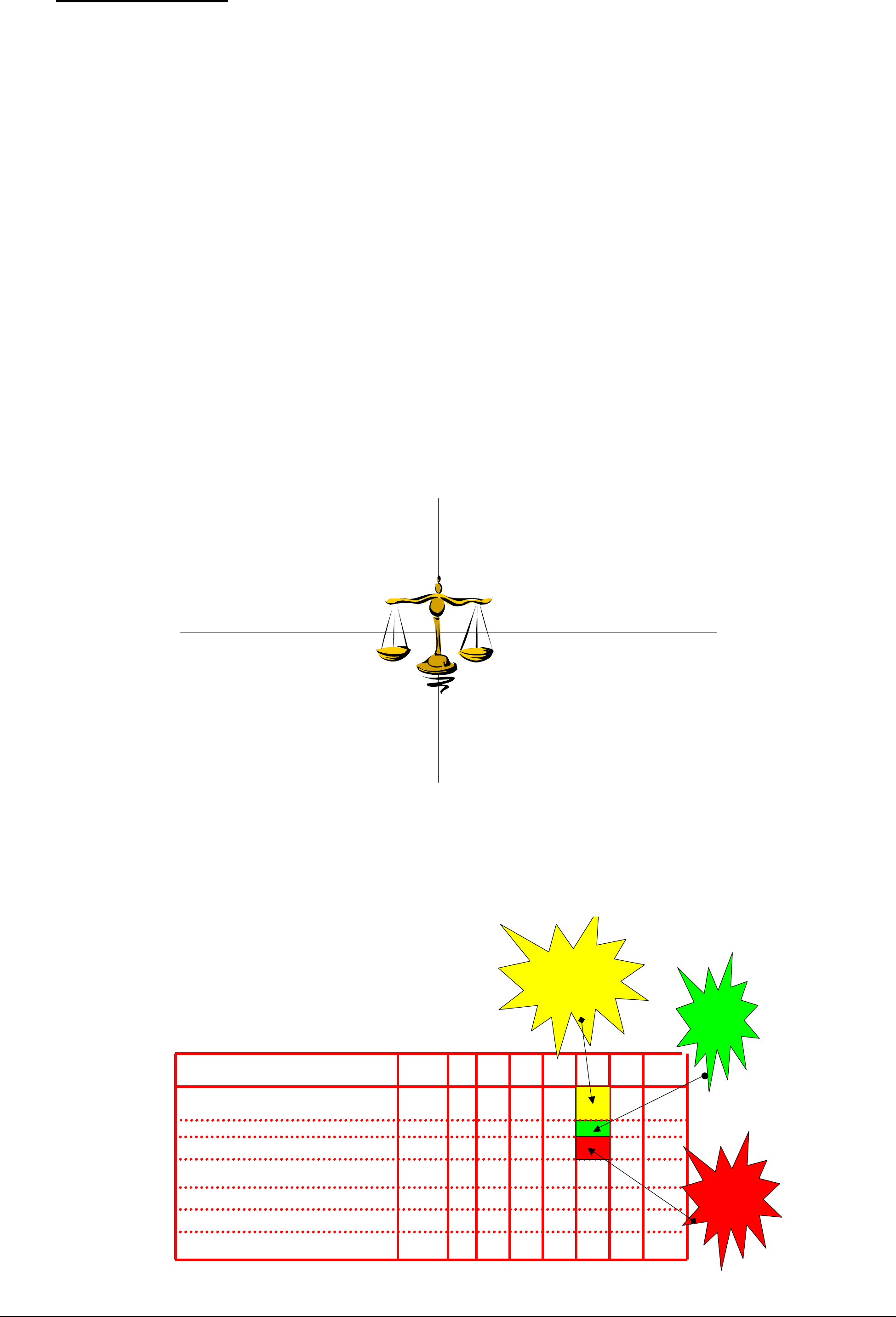

Various examples of scorecard layouts were reviewed and the initial scorecard was modelled on the example shown in Figure 2.

Example Layout (Reporting Month: June 2003)

Colour legend: Under control; Warning (+/- 5% deviation); Issue (+/- 10% deviation)

| Service Delivery Performance | Target | Monthly result (columns Jan, Feb, Mar, Apr, Jun, …) | Trend |
| --- | --- | --- | --- |
| Sev 1 problems restored in SLA | 95% | 89% | [arrow] |
| Sev 2 problems restored in SLA | 90% | 88% | [arrow] |
| Help Desk Fixed First Contact | 75% | 61% | [arrow] |
| etc. | | | |

Figure 2 – Scorecard example layout
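The colour legend in Figure 2 can be read as a simple threshold rule. The sketch below is one possible interpretation, not Suncorp's documented calculation: it assumes deviation is measured relative to the target, that 5% up to 10% is a Warning, and that 10% or more is an Issue. The function name `rag_status` is hypothetical.

```python
def rag_status(target: float, actual: float) -> str:
    """Classify a result by its relative deviation from target.

    Assumed thresholds (from the Figure 2 legend): under 5% deviation is
    "Under control", 5% up to 10% is "Warning", 10% or more is "Issue".
    """
    if target == 0:
        raise ValueError("target must be non-zero")
    deviation = abs(actual - target) / abs(target)
    if deviation >= 0.10:
        return "Issue"
    if deviation >= 0.05:
        return "Warning"
    return "Under control"


# Rows taken from the Figure 2 example layout: (metric, target %, actual %).
rows = [
    ("Sev 1 problems restored in SLA", 95, 89),
    ("Sev 2 problems restored in SLA", 90, 88),
    ("Help Desk Fixed First Contact", 75, 61),
]
for name, target, actual in rows:
    print(f"{name}: {rag_status(target, actual)}")
```

Because the legend is stated as "+/-", the sketch treats over- and under-shooting the target alike; a scorecard that only penalises shortfalls would need a signed comparison instead.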

3.2 Step 2 – Initial Selection of Metrics

Meta Group recommended an initial set of metrics that, based on their global experience:

• were used as a foundation in many IT Balanced Scorecards;

• had industry standard benchmarks available; and

• were applicable to Suncorp IT’s business and environment.

Suncorp added a few that related to key initiatives and after tweaking the Kaplan & Norton model

the scorecard framework was set. (Refer to Figure 1 for structure, Figure 2 for example layout).

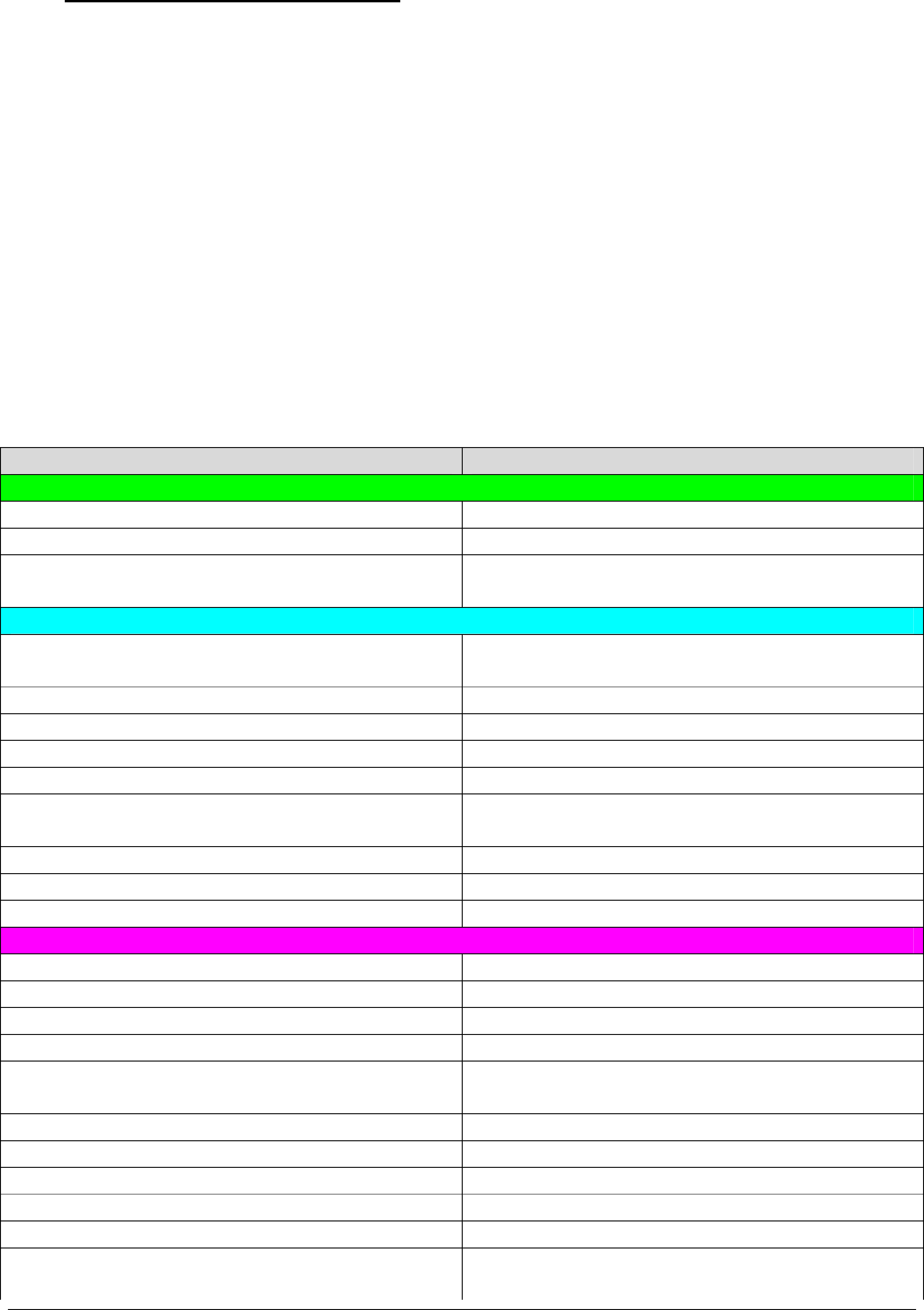

The motivation for each of the initial metrics is shown in Table 1 below. Although others were considered (eg. Charge-back gap actual v forecast), this list provided a starting point, was easy to map to Kaplan & Norton’s model, and external benchmarks were largely available.

Time would tell: although several of the measures survived, several did not, as they were perceived either as not aligned to strategic initiatives or operational goals, or as not easily related to the business value of IT.

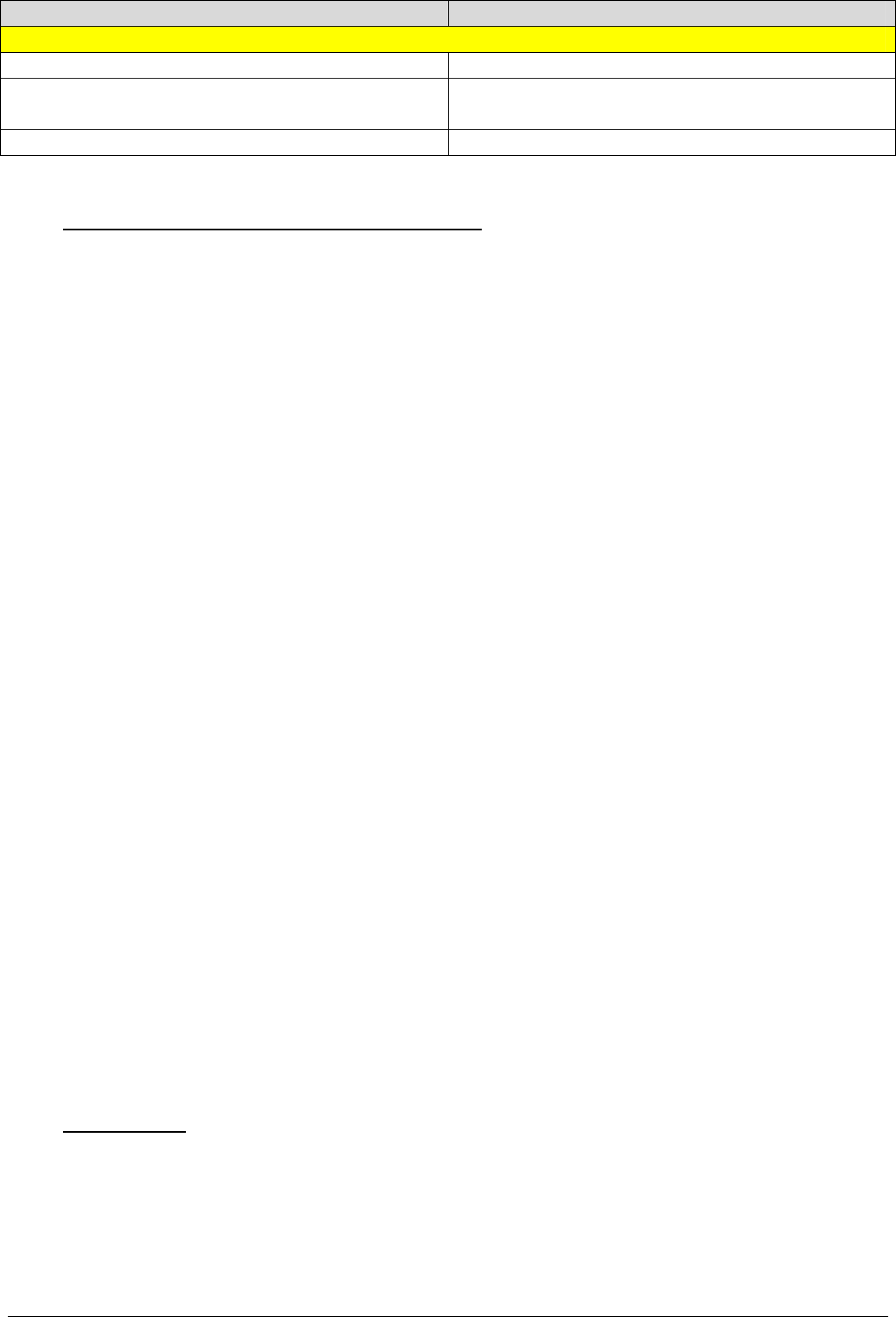

| Perspective | Metric | Motivation |
| --- | --- | --- |
| Finances | Actual Operating Expenses variance to budget | Inescapable measure – budget |
| Finances | FTE variance to Budget | Resources a significant driver of costs |
| Finances | TCO v Benchmarks | Cost competitive on # FTEs supporting various platforms |
| Processes | Adherence to Architecture | Applicability of frameworks and consistency of use across IT |
| Processes | Architecture Reuse | Development Efficiency and Consistency |
| Processes | Architecture Time to Market | Timely facilitation of Development teams |
| Processes | Adherence to Policies | Assess effectiveness of policies |
| Processes | Adherence to Service Level Agreements | Measure of delivery as contracted |
| Processes | Changes Causing Downtime | IT should not cause disruption to customers through poor quality of work |
| Processes | Changes to Fix Same Problem | Fix problems at first attempt |
| Processes | Changes Causing Other Problems | IT should not cause problems for itself |
| Processes | Changes Implemented without Testing | Quality assurance |
| Customers | Service Availability | Services meet business need for availability |
| Customers | Customer Satisfaction Surveys | Direct satisfaction measure |
| Customers | Problem Resolution – Sev 1 within SLA | Critical incidents resolved as per service level |
| Customers | Problem Resolution – Sev 2 within SLA | Major incidents resolved as per service level |
| Customers | Service Desk Fixed on First Contact | Alignment to business need to address issues at first point of contact |
| Customers | Service Desk Responsiveness < 60 secs | Calls answered promptly |
| Customers | Service Request Provided by Date Required | Delivery as per agreed service level |
| Customers | Projects & Releases Completed on Time | Benefits streams on tap when expected |
| Customers | Projects & Releases Completed on Budget Hrs | Costs of initiatives as quoted |
| Customers | Projects & Releases Started on Time | Scheduled projects/releases start as planned |
| Customers | Pre-Project Investigations Delivered on Time | Enable business decisions to be timely for approval of new initiatives |
| Employees | Turnover of High Performing Staff | Retention of high performers |
| Employees | Career Portfolio Assessments Developed | Capability assessment and career plan to show there is a career future with Suncorp |
| Employees | Career Development Plans Actioned | Attracting and growing staff |

Table 1

3.3 Step 3 - Data Capture, Quality and Accuracy

Automated capture of data, as a by-product of processes is the ideal for delivery of metrics. Self-

reporting or forced collection compromises quality and reliability of the data, but there may not be

automated systems in place for capturing all data.

Core sources for Suncorp’s IT metrics are:

- HR;

- Financials (Peoplesoft);

- Service Desk/Configuration Management System for Calls, Problems, Service Requests,

Changes, Releases (Solve Central);

- a Project Portfolio Management System (Project Gateway) containing all project and

BAU operational schedules (MS-Project and PMW) as well as timesheets; and

- Manually collected data such as Function Points and Service Availability.

Collecting data manually or receiving self-evaluated, sometimes anecdotal data, risks reporting of

“engineered” results and introduces delays in production of reports - few people choose to represent

their performance indicators in a bad light.

Manual capture of some data cannot be avoided, such as Function Points to size systems for

productivity measures. The importance here is to ensure transparency and auditability of the data

collected.

Suncorp introduced an IT Data Mart to collate information across its IT management systems and

make it available to all IT staff for query, reporting and metrics.

Whatever the source of data, Suncorp IT Metrics found it imperative to be transparent about the

origin, derivation, calculations and evaluation thresholds of data and to expose the underlying data

for each reported measure.

Initially, the documentation that defined each metric was too detailed and technical for senior management. A Metrics Overview register aimed at senior management was subsequently established, documenting the objectives, ownership, source and meaning of each metric, with a cross reference to the reports and scorecards on which it appears.

ISO 15939 is now being implemented for definition of metrics.
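As an illustration of what one entry in such a Metrics Overview register might hold, the sketch below models the fields named above (objective, ownership, source, meaning, and the cross reference to reports). The class name, the helper `metrics_on`, and the owner and scorecard names are hypothetical; only the metric names and the source systems come from this paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class MetricDefinition:
    """One row of a senior-management metrics overview register."""
    name: str
    objective: str
    owner: str      # accountable manager (illustrative values below)
    source: str     # system the underlying data comes from
    meaning: str    # plain-language reading of the number
    appears_on: Tuple[str, ...] = ()  # reports and scorecards that use it


def metrics_on(register: List[MetricDefinition], report: str) -> List[str]:
    """Cross reference: names of the metrics that appear on a given report."""
    return [m.name for m in register if report in m.appears_on]


register = [
    MetricDefinition(
        name="Service Desk Fixed on First Contact",
        objective="Address issues at first point of contact",
        owner="Manager - Service Desk",  # hypothetical owner
        source="Solve Central",
        meaning="Share of calls resolved without escalation",
        appears_on=("GE scorecard", "Service Desk scorecard"),  # hypothetical
    ),
    MetricDefinition(
        name="FTE variance to Budget",
        objective="Track resources as a driver of cost",
        owner="GE - IT",  # hypothetical owner
        source="HR",
        meaning="Difference between actual and budgeted staff numbers",
        appears_on=("GE scorecard",),  # hypothetical
    ),
]

print(metrics_on(register, "Service Desk scorecard"))
```

Keeping the cross reference on the metric itself means a change to a definition can be traced to every scorecard it affects.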

3.4 Step 4 – Pilot

During FY 2002/3 a pilot Balanced Scorecard for IT as a Business Unit was produced monthly for

the IT General Executive.

The delivery vehicle for the initial scorecard was Excel spreadsheets with linkages to worksheets/books containing underlying data (where practical). The benefits of this approach were rapid prototyping and delivery, graphical display alongside data, formatting and grouping of data, and the absence of high-tech gadgetry that could take the focus off the information being presented (even senior management are familiar with Excel). Pivot Tables challenged some users, which was overcome to a degree by training; however, they are extremely useful for presenting the underlying data. The downside is the manually intensive nature of producing scorecards with drill-through.

The use of such a simple tool was seen as a tactical move to delivering information rapidly and

simply - plenty of time to move to more sophisticated tools later.

Reviews during the year with senior management refined the metrics and added:

• perspective to each metric in terms of accompanying volumetric information such as

number of Help Desk Calls, number of Sev 1 problems, number of projects completed;

• YTD column – some as an annualised projection, mostly as averages YTD;

• Benchmark column – documenting industry performance benchmarks - alongside the

Suncorp Target column; and

• data quality rating for each metric.
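The two flavours of YTD column mentioned above can be sketched as follows. This is a minimal illustration under assumed conventions (a simple arithmetic mean for percentage measures, and a straight run-rate projection over 12 months for counts); the paper does not state the exact formulas used, and the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def ytd_average(monthly_values: Sequence[float]) -> float:
    """Average of the monthly results reported so far this financial year."""
    if not monthly_values:
        raise ValueError("no months reported yet")
    return mean(monthly_values)


def annualised_projection(monthly_counts: Sequence[float],
                          months_in_year: int = 12) -> float:
    """Project a full-year total from the average monthly run rate so far."""
    if not monthly_counts:
        raise ValueError("no months reported yet")
    return sum(monthly_counts) / len(monthly_counts) * months_in_year


# Illustrative figures only: two months of a percentage measure,
# and three months of a volumetric count such as projects completed.
print(ytd_average([80.0, 90.0]))
print(annualised_projection([10, 20, 30]))
```

An unweighted average treats a quiet month the same as a busy one, which is one reason the accompanying volumetric columns help put each percentage in perspective.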

4. First Lessons

Lesson 1 – Choosing Metrics that mean something and add value is not easy. Don’t fall into the trap of choosing things that are “interesting” – they must drive action and be relevant to business and IT. The major issues that surfaced during the pilot were that the structure and metrics initially chosen were seen as IT-only measures, not able to be used by or related to the Business, nor easily and overtly linked to IT and Business strategy.

Lesson 2 - Choosing Metrics is easy compared to Data Collection. Don’t choose metrics you can’t

reliably collect – introduce them later when you can. No-one wants a scorecard full of “TBA”

measures nor measures where poor data quality makes them unusable.

Lesson 3 – Targets should be challenging but attainable, otherwise it will be a “sea of Red”. Don’t

set all targets at the industry benchmark as your organisation may be developing processes towards

that point but not be there yet. Too much red causes dysfunctional behaviour and a culture of just

changing targets or metrics because of colour. Remember – colour highlights performance issues

and if linked to KPIs it could mean bonuses are at risk.

Lesson 4 – Ownership of issues will be unclear and the focus of debate when measures are

averaged across divisions and departments without transparency on how they were derived and who

contributed what to which. First questions will be – what caused this and who’s responsible for

fixing it?

Lesson 5 – Be flexible, but guiding. Use an iterative prototyping method with users to establish the

scorecards using low-tech tools – model with them and be flexible or they won’t use it. Don’t be a

stickler for “strategic” or “outcome” metrics when it comes to what they want to measure – let them

have operational measures if they wish but guide them.

Initially the scorecard owner will have a lot to deal with that is new, including:

- the scorecard concept itself;

- being measured objectively against KPIs;

- transparency of their performance and direct visibility to others (red is not the most

desirable colour);

- wide-ranging measures – not just managing budgets and staff numbers; and

- poor quality of their own data driven by inconsistent application of processes / standards.

Your first attempt needs to have a soft landing or you won’t get another ride. There is no “perfect 10” for IT Balanced Scorecards.

5. Take II – Contemporary Model

A major review of the pilot year was conducted 9 months in as this coincided with the latter stages

of business planning for the new financial year.

Feedback during the review highlighted that the main avenues for enhancing value from scorecards going forward were:

• the structure and metric groups needed to be easily and overtly linked to new Business

strategy and IT business plans; and

• the metrics themselves needed to include more that were useable in a business context to

demonstrate the value of IT to the Business in terms it understood (ie. IT needed to

blend in some metrics to market the good news not just highlight issues).

There was also a new challenge – the scorecard was to be rolled out to the next 2 levels of IT

management – 4 General Managers of IT Divisions (Applications, Infrastructure, Strategy &

Architecture, Client & Internal Services) and 18 Departmental Managers (eg. Banking Systems,

General Insurance Systems, Service Desk, Mainframe Infrastructure etc), and it was to be used as

one input to KPI assessments for the year.

The push of scorecards to lower levels emphasised the need to reflect the individual divisional and

departmental business plans and operational goals for each scorecard owner.

During strategic planning for the Group, Suncorp devised a new Business Vision – to become the

most desirable financial services company in Australia, for:

• Customers to do Business with;

• Our Employees to Work for;

• The Community to be Associated with;

• Shareholders to Invest in.

All business planning across the group was to be linked to these 4 strategic themes and as these

themes appeared similar in alignment to Kaplan & Norton’s perspectives and supported the

principle behind their model for scorecards (ie. to broaden the base of measures) it seemed a logical

step to restructure scorecards along these lines.

Therefore the new IT scorecards at each level would have a 1st-level “strategic theme” structure of:

• Customers – external customer value and enhancing the customer experience;

• Employees – engagement of employees;

• Community – contribution to the community; and

• Shareholders – enhancing value through operational efficiency and effectiveness.

Next step was to engage all stakeholders during their business planning to identify metrics that

related to outcome measures for initiatives and operational goals. Business planning at a conceptual

level involved strategic conversations covering the following topics:

A – Where are we now

B – Where do we want to be

C – What do we do to get there

D – How do we make it happen

5.1 Metrics Review

The main objectives of the review of metrics were to:

• Ensure match to new groupings for driving of the owner’s business initiatives and

operational goals;

• Hierarchically link to their manager’s metrics;

• Introduce more business value based metrics to make the scorecard more useable with

the Business;

• Set targets appropriate to their division / department and process maturity, rather than

Industry Benchmarks.

In doing so, reference was made to a number of works, including a paper on IT Balanced

Scorecards from the Working Council for Chief Information Officers 2003 [2]. This paper provided

validation of much of what we were doing and also additional options and insights for

consideration.

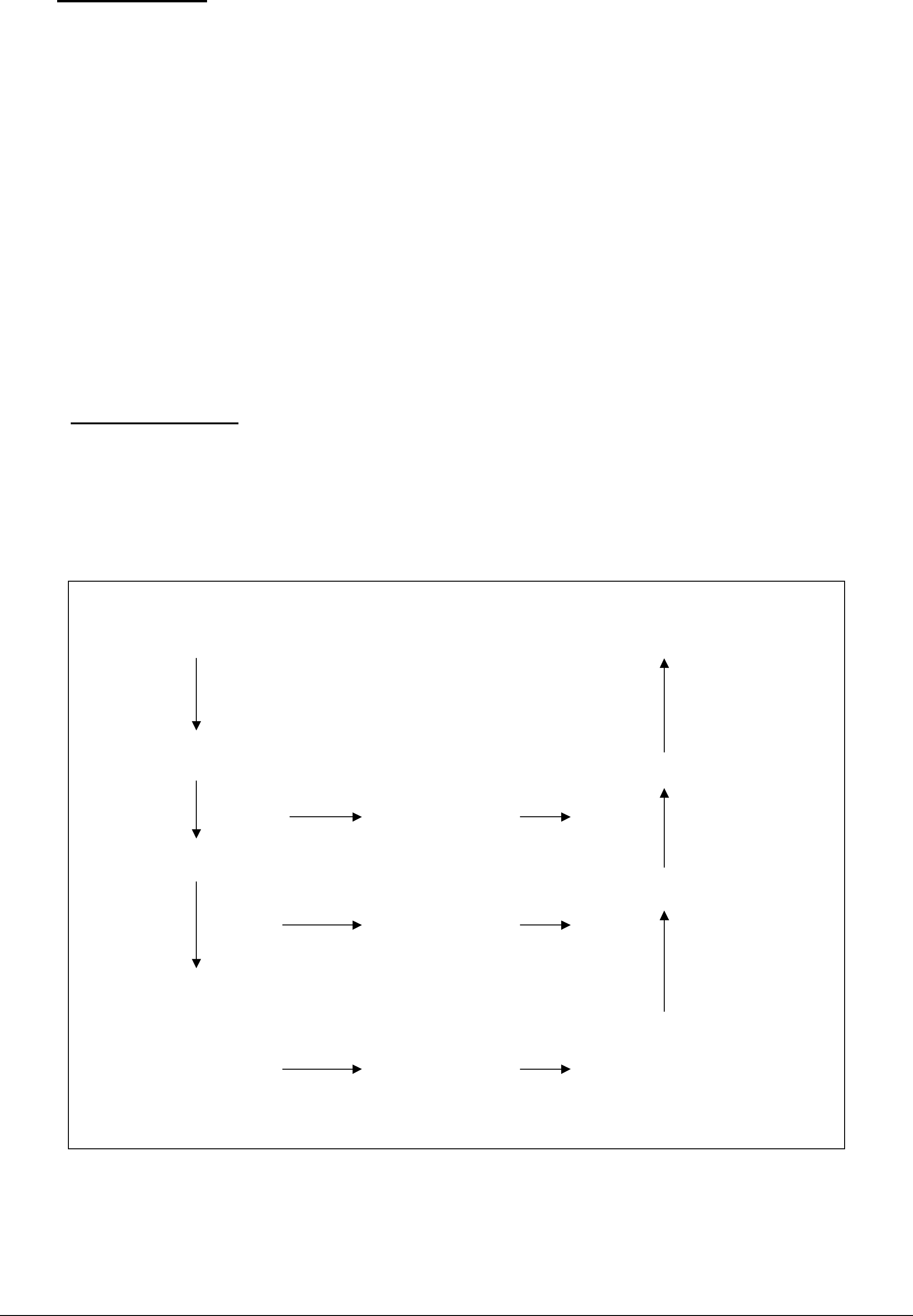

5.1.1 Metrics Linkages

With each manager developing a business plan at their level of the organisation that was

hierarchically linked to their manager’s business plan, it seemed logical that the metrics based on

outcomes would also link hierarchically – from GE of IT to her GMs and from each of those GMs

to their Managers, as per Figure 3.

[Figure 3: flow diagram. The four Corporate Strategy themes (Customers to do business with, Our Employees to work for, Community to be associated with, Shareholders to invest in) cascade through GE Planning, GM Planning and Manager Planning, each covering conversations A to D. At each level the status of B’s and the outputs from C / D’s feed Metrics, which populate the Group, GE, GM and Manager Balanced Scorecards, each grouped under Customers, Our Employees, Community and Shareholders.]

Figure 3 – Linkage of Business Plans to Scorecard Metrics

The consulting sessions on metrics during business planning were an invaluable means to engage stakeholders and assist their understanding of scorecards and metrics, and for the metrics team to better understand the managers’ business drivers. They built relationships.

Business plans largely showed the grouping of goals and operational objectives within strategic

themes. These goals and operational objectives would naturally flow into the grouping of metrics

that supported tracking and performance of them. Therefore metrics were now explicitly linked to

business plans and to organisational strategy.

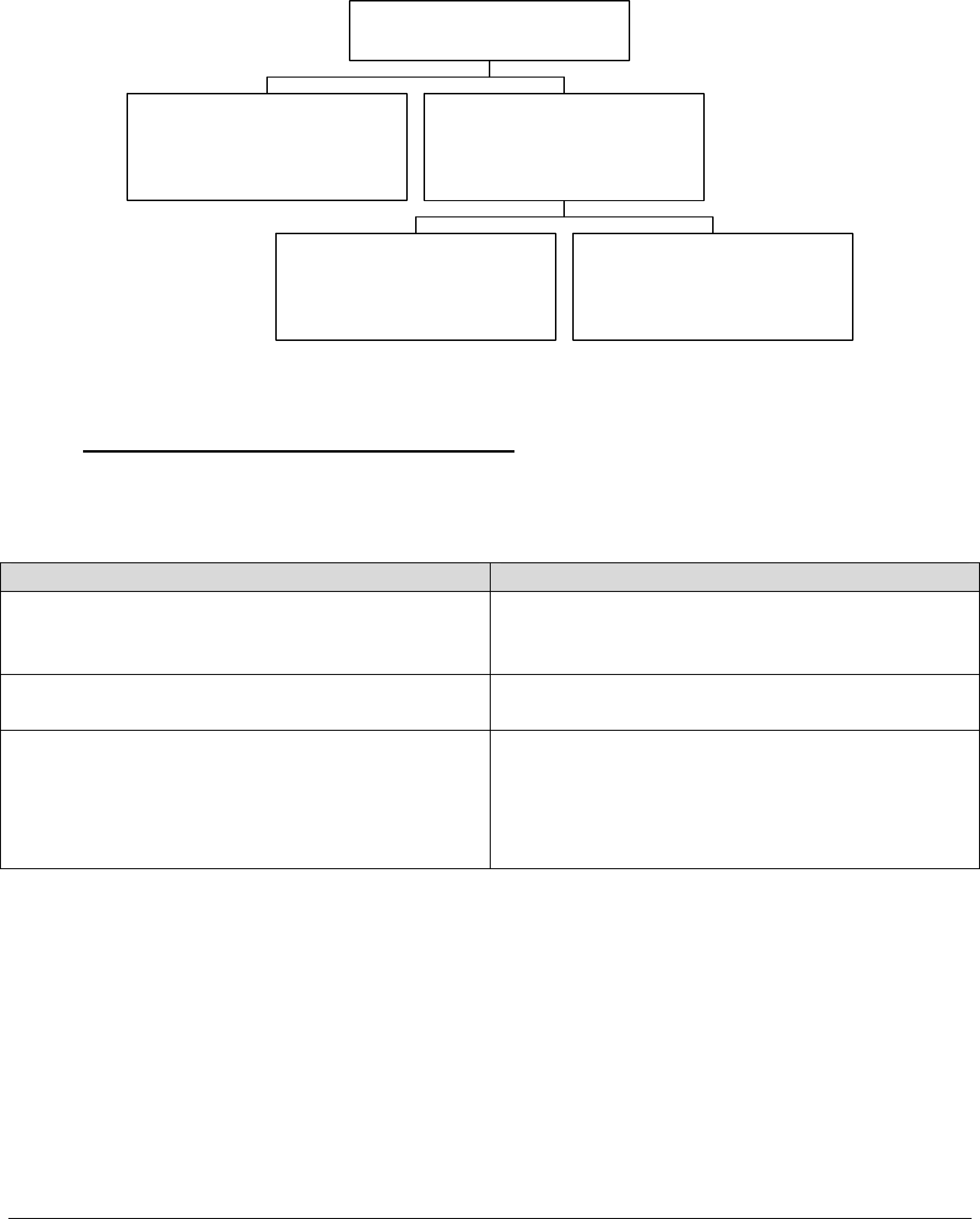

In linking metrics hierarchically, there was either a 1 to 1 relationship or 1 to many, as shown in the

example in Figure 4 below.

• GE Metric: Availability of Critical Systems
  - GM - Infrastructure: Availability of Mainframe, Midrange & Networks for Critical Systems
  - GM - Applications: Availability of Critical Applications
    - Manager - Banking Systems: Retail Banking Apps Avail.; Business Banking Apps Avail.; External Interface Apps Avail.
    - Manager - Insurance Systems: Suncorp GI Apps Avail; GIO GI Apps Avail; Receipting Apps Avail

Figure 4 – example of hierarchically linked metrics
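The hierarchy in Figure 4 can be sketched as a small tree in which each manager's metrics roll up to their GM's metric while the individual contributors stay visible (the transparency concern raised in Lesson 4). This is an illustrative sketch only: the roll-up rule (an unweighted mean of the child values), the class `MetricNode`, the name "Banking Apps Avail." and all availability figures are assumptions, not Suncorp's method or data.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class MetricNode:
    owner: str
    name: str
    value: Optional[float] = None  # set on leaf metrics only
    children: List["MetricNode"] = field(default_factory=list)

    def leaves(self) -> List["MetricNode"]:
        """All leaf metrics underneath this node."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]

    def rolled_up(self) -> float:
        """Leaf value, or the unweighted mean of the children (assumed rule)."""
        if not self.children:
            if self.value is None:
                raise ValueError(f"{self.name} has no value")
            return self.value
        return mean(child.rolled_up() for child in self.children)

    def worst_contributor(self) -> "MetricNode":
        """Leaf with the lowest value: who contributed what to the result."""
        return min(self.leaves(), key=lambda leaf: leaf.rolled_up())


def leaf(owner: str, name: str, value: float) -> MetricNode:
    return MetricNode(owner, name, value)


banking = MetricNode("Manager - Banking Systems", "Banking Apps Avail.", children=[
    leaf("Manager - Banking Systems", "Retail Banking Apps Avail.", 99.0),
    leaf("Manager - Banking Systems", "Business Banking Apps Avail.", 98.0),
    leaf("Manager - Banking Systems", "External Interface Apps Avail.", 100.0),
])
insurance = MetricNode("Manager - Insurance Systems", "Insurance Apps Avail.", children=[
    leaf("Manager - Insurance Systems", "Suncorp GI Apps Avail", 97.0),
    leaf("Manager - Insurance Systems", "GIO GI Apps Avail", 99.0),
    leaf("Manager - Insurance Systems", "Receipting Apps Avail", 98.0),
])
gm_apps = MetricNode("GM - Applications", "Availability of Critical Applications",
                     children=[banking, insurance])

worst = gm_apps.worst_contributor()
print(gm_apps.rolled_up(), worst.owner, worst.name)
```

A mean of means hides differences in system size or criticality; a weighted roll-up, or reporting the minimum, would be equally defensible choices.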

5.1.2 Business Centric Metrics and Fine-Tuning

In making metrics more business-value based, some of the IT centric metrics were altered, for

example:

| Pilot Metric | Revised Metric(s) |
| --- | --- |
| Service Request provided by Date Required | % SLAs Met; Avg Days Lead time on Service Requests; Avg Days delivery of Laptops and Desktops |
| Customer Satisfaction (of internal business customers) | Survey on IT alignment to Business |
| TCO vs Benchmark | % Effort on Enhancements vs Total (shows time spent on business enhancements, which is valued by the Business, v Fail and Fix effort, which is not valued by business); % Effort on Overheads vs Total |
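The "% Effort ... vs Total" metrics above amount to each category's share of total recorded effort. A minimal sketch, assuming effort is available as hours per category (for example from timesheets); the category keys and the hours are hypothetical.

```python
from typing import Dict


def effort_share(hours_by_category: Dict[str, float]) -> Dict[str, float]:
    """Return each category's percentage of total recorded hours."""
    total = sum(hours_by_category.values())
    if total <= 0:
        raise ValueError("total effort must be positive")
    return {category: 100.0 * hours / total
            for category, hours in hours_by_category.items()}


# Illustrative month of timesheet hours for one department.
shares = effort_share({"enhancements": 60, "fail_and_fix": 30, "overheads": 10})
print(shares)
```

Reporting all shares together keeps the denominator visible, so a rise in enhancement effort can be checked against what fell elsewhere.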

Other metrics were removed or altered because they were causing undesirable behaviour. For

example, “Fixed on First Contact” was timed-off at 30 minutes, causing Service Desk operators to

extend call time until all avenues for resolution at Service Desk were exhausted or the 30-minute

mark was reached. This had the effect of dropping Service Levels significantly as operators were

not as available to take incoming calls.

New metrics were added in relation to security and disaster recovery capability, for example:

• %Security Policies Developed v IT Framework

• %Security Patches Installed v Available

• #Virus Intrusions not intercepted

• %DR Tests Completed Successfully v Planned

5.2 Live Pilot - II

With new targets negotiated, new metrics agreed and documented, the framework was in place for a

challenging year for the IT Metrics team. The outcome since this change to a more contemporary

model was the use of scorecards in the FY 2003/4 annual assessments of all IT management staff.

Feedback on the whole was very positive and use of the scorecards throughout the year to initiate

action for addressing issues highlighted and then track the impact of those actions gathered

momentum.

Several managers that were not across the pilot scorecard developed “red light fever” – an aversion

to anything red on their scorecard and a realisation that scorecards are not all good news. The

degree of the reaction may be less in some organisations than others depending on organisational

culture in respect of dealing with issues, and the sense of community within the organisation when

dealing with problems.

Challenges were made on data quality, the relevance of metrics (in most instances that they chose),

and in the agreed targets. Clearly there was a lifecycle to how management took on, owned and

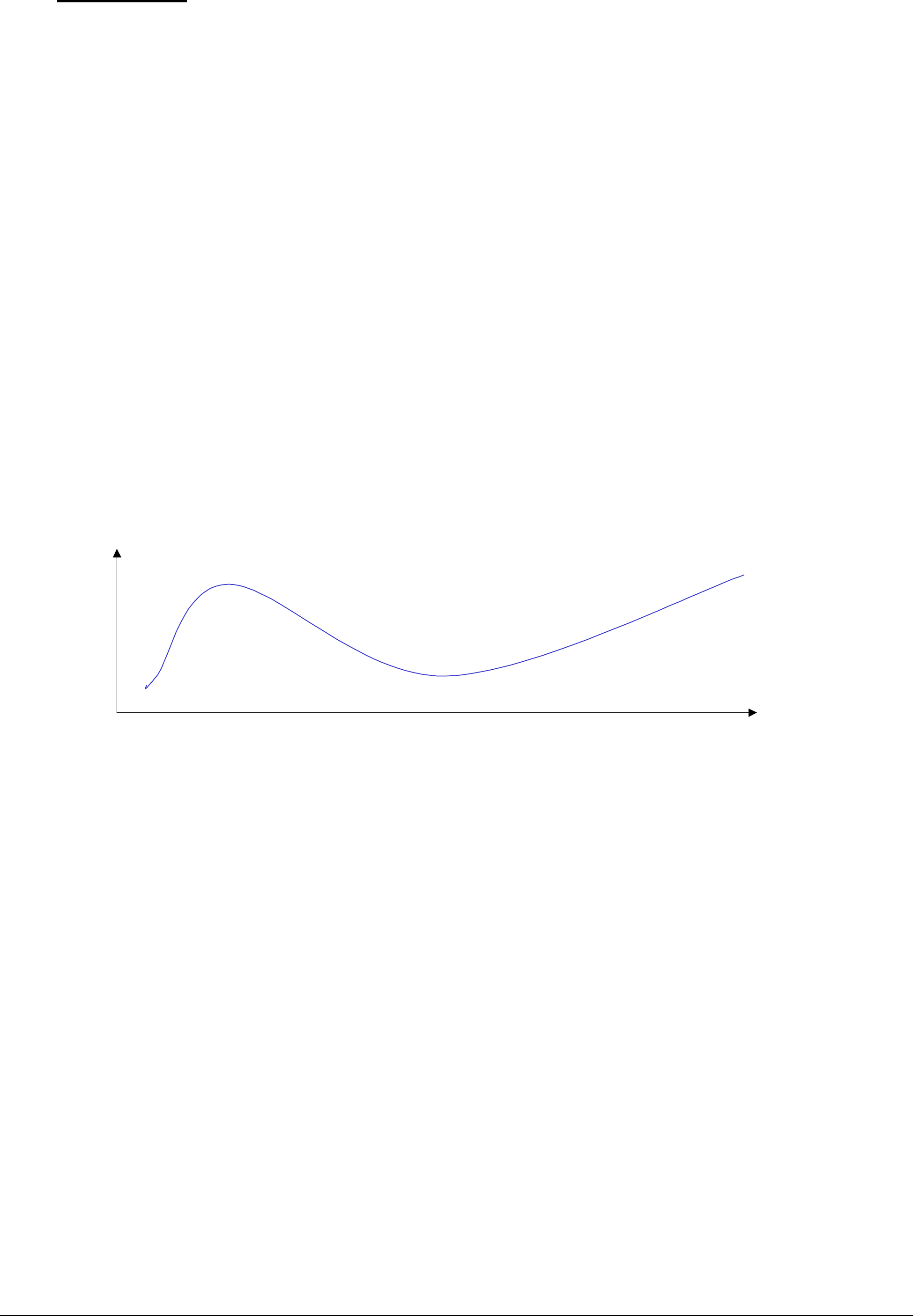

accepted being measured via this means. Figure 5 below provides a model of this lifecycle of

acceptance at Suncorp.

[Figure 5: curve of Satisfaction (vertical axis) against Time (horizontal axis), labelled with the stages Initial Promise; Realisation - not all good news; Denial / Discredit; Change Metrics or Targets; Accept and Action; Realise Value.]

Figure 5 – Scorecard Acceptance Lifecycle

In delivery terms, to produce over 20 scorecards per month from a small team whose framework

was based on Excel meant sacrifices in what could be delivered. The casualties included detailed

analysis and commentary on trends, and drill down on data.

To address this gap an initiative is underway to implement a Management Information System

across the IT Metrics Data Mart to allow automated production of scorecards through Cognos

Metrics Manager and drill down OLAP analysis using PowerPlay. This deployment will be

essential in assisting managers through the denial / discredit stages and by exposing the data to them

will ensure they take ownership of data quality and root cause analysis that will lead to ultimate

acceptance and action of issues highlighted by the scorecard measures.
